How To Run

coTestPilot.ai

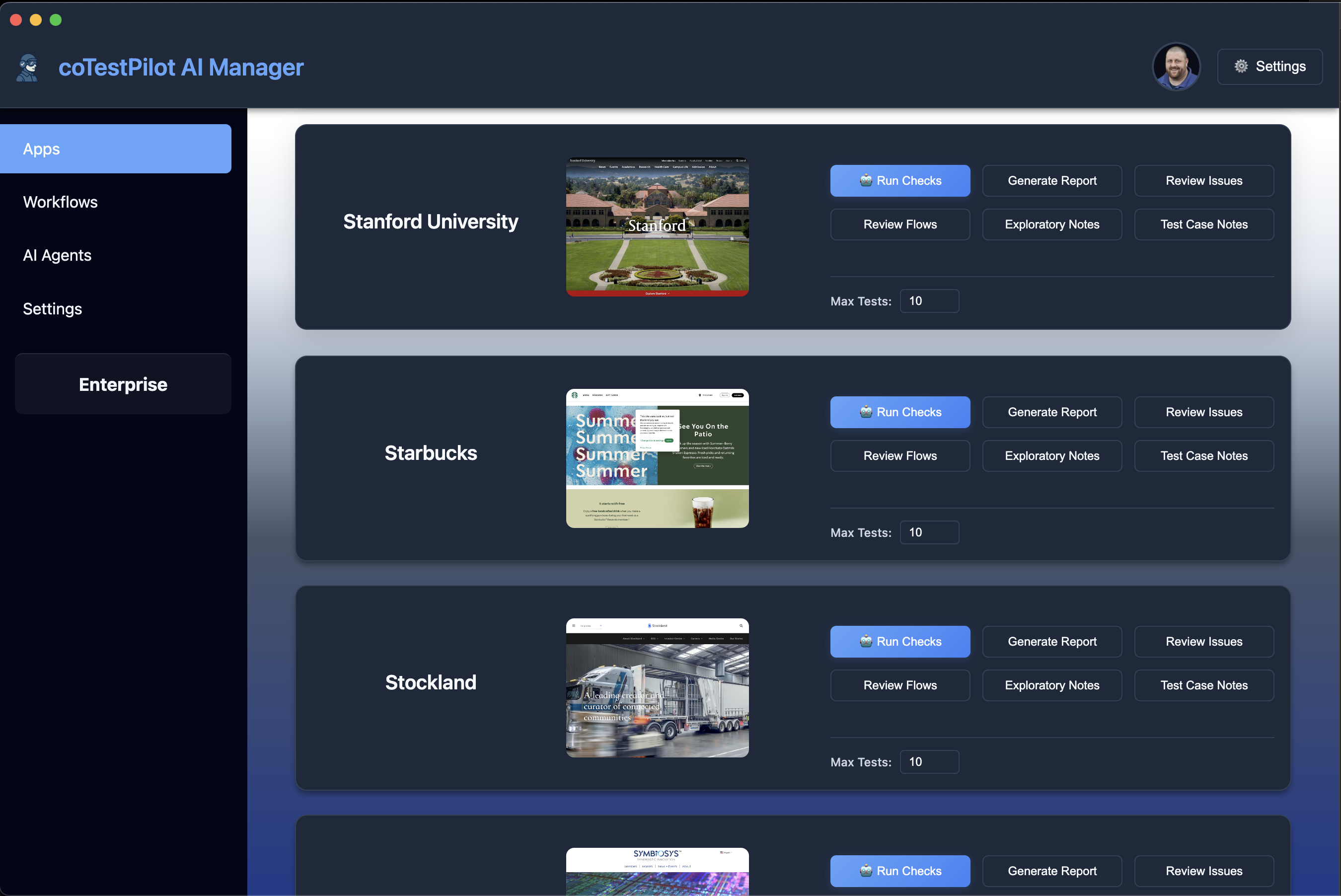

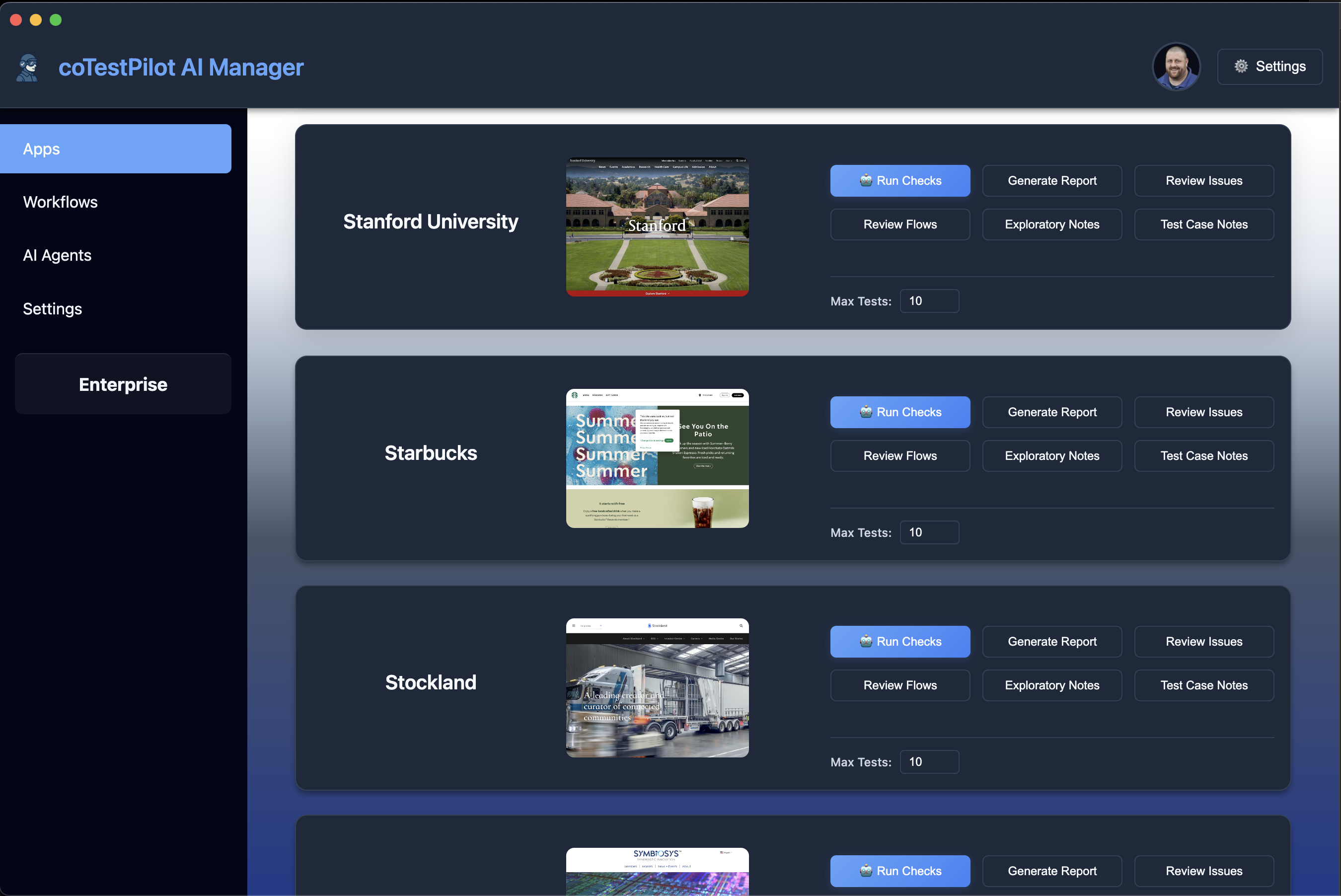

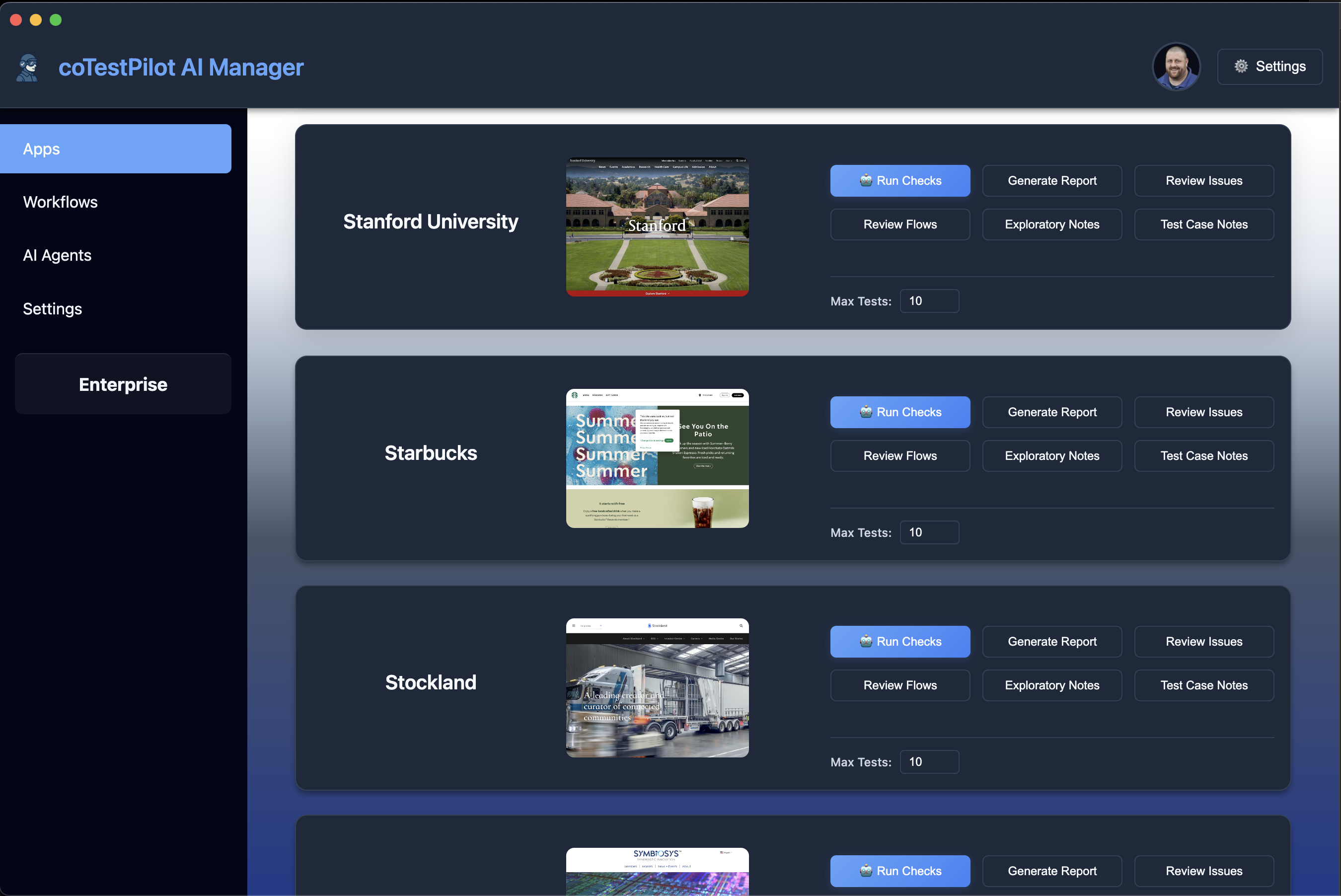

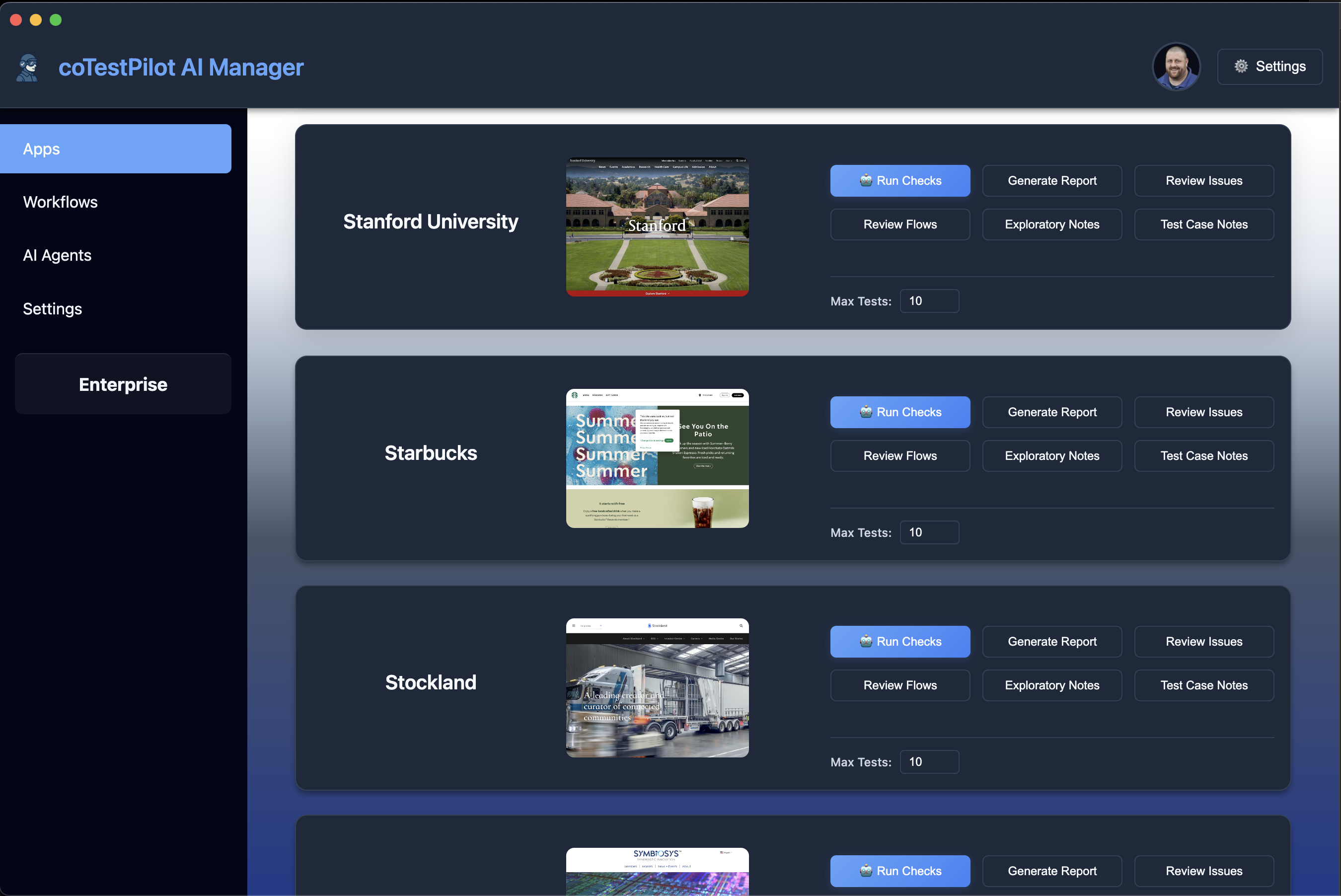

Step #1: Launch the testersapp

Start by launching the testersapp to begin your AI-powered testing journey.

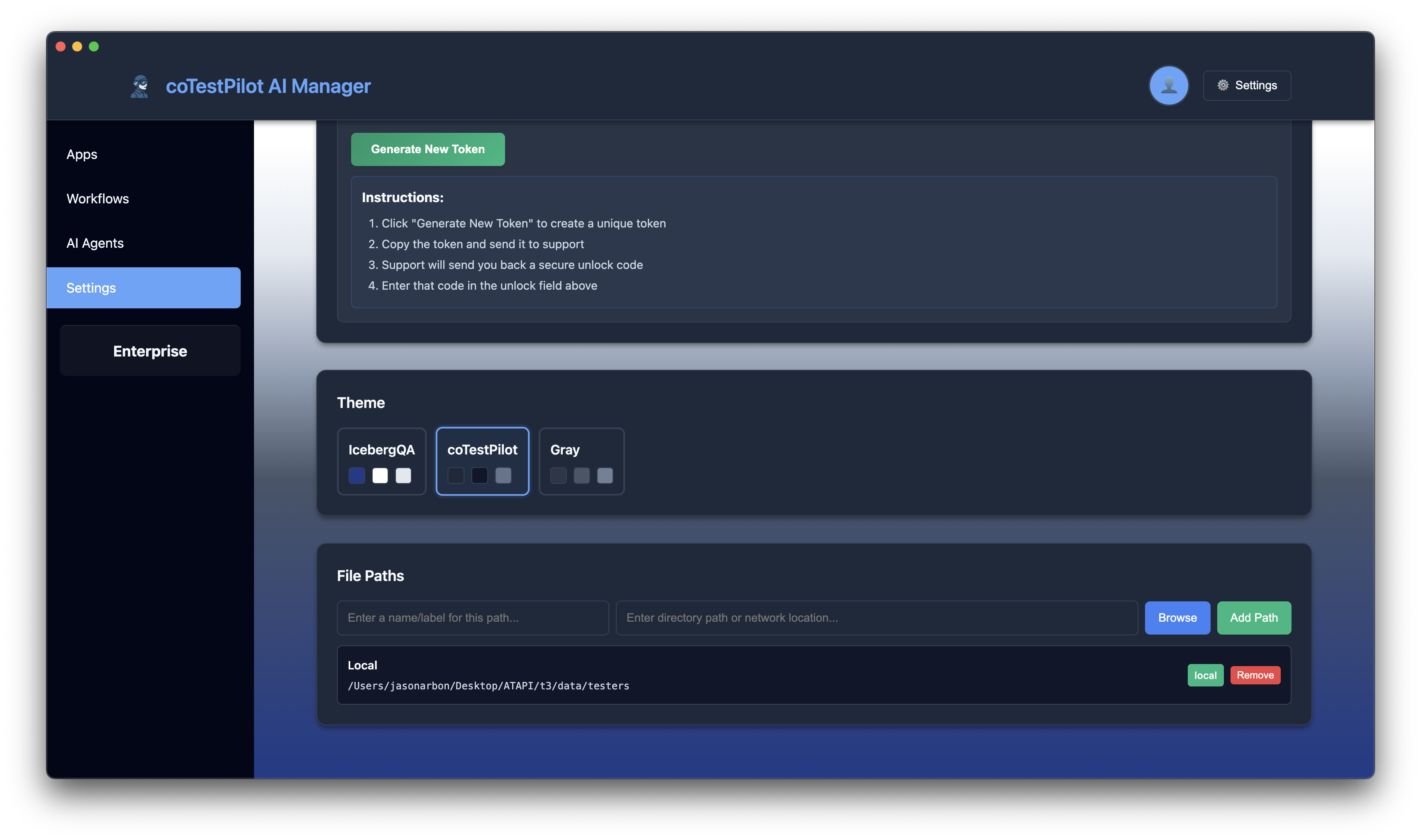

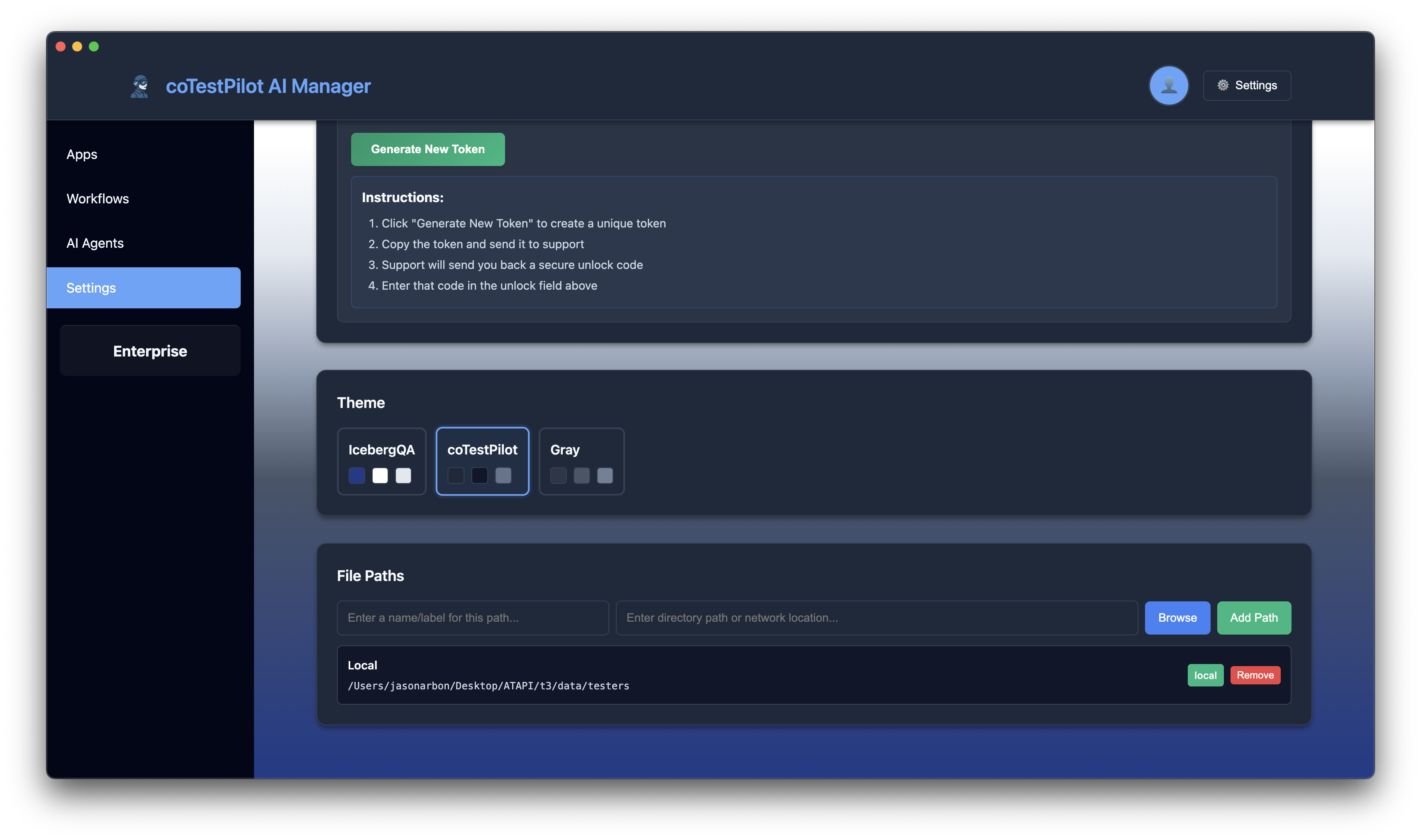

Step #2: Set path to testers app

Configure the path to your testers app from testers.ai in the settings.

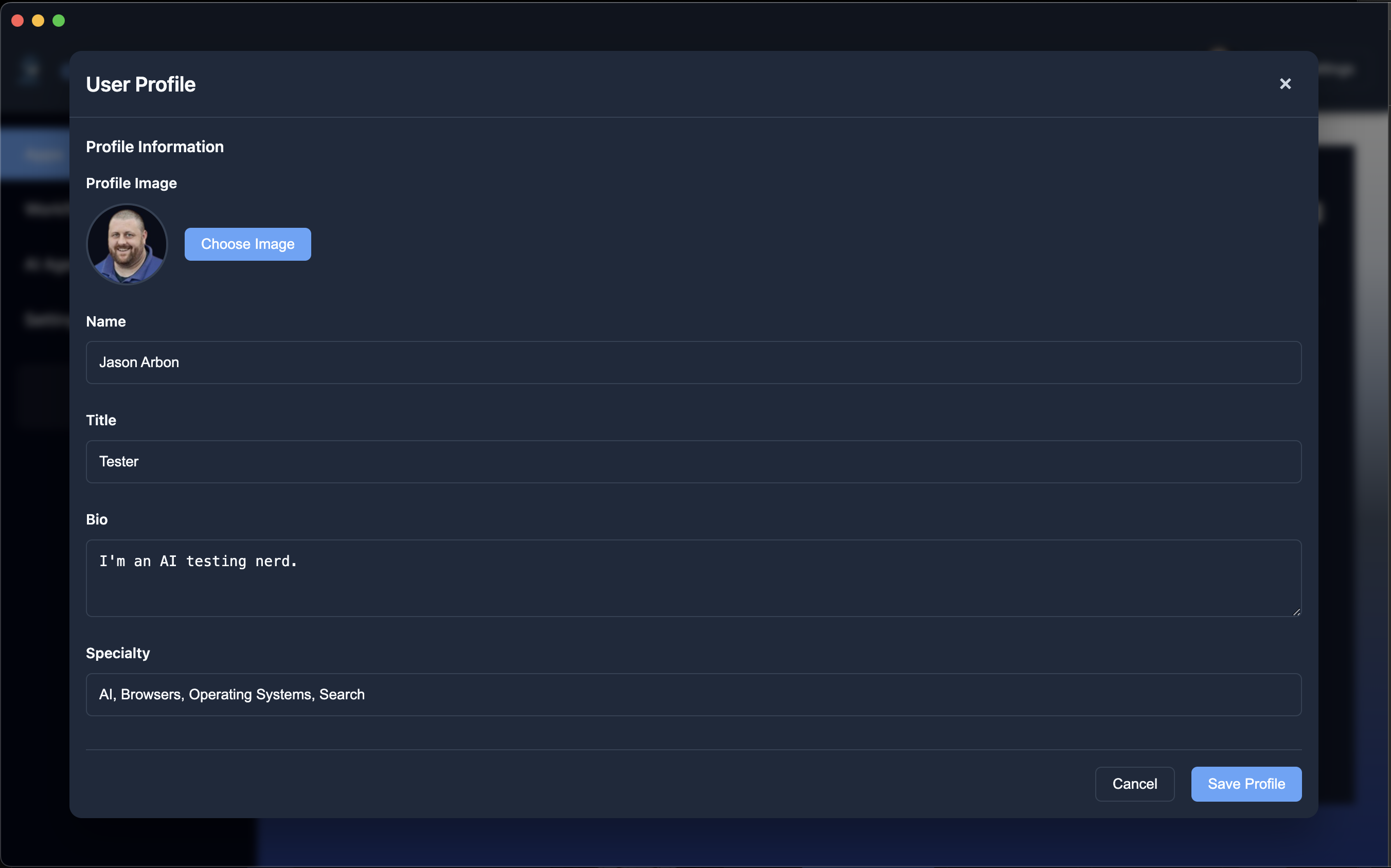

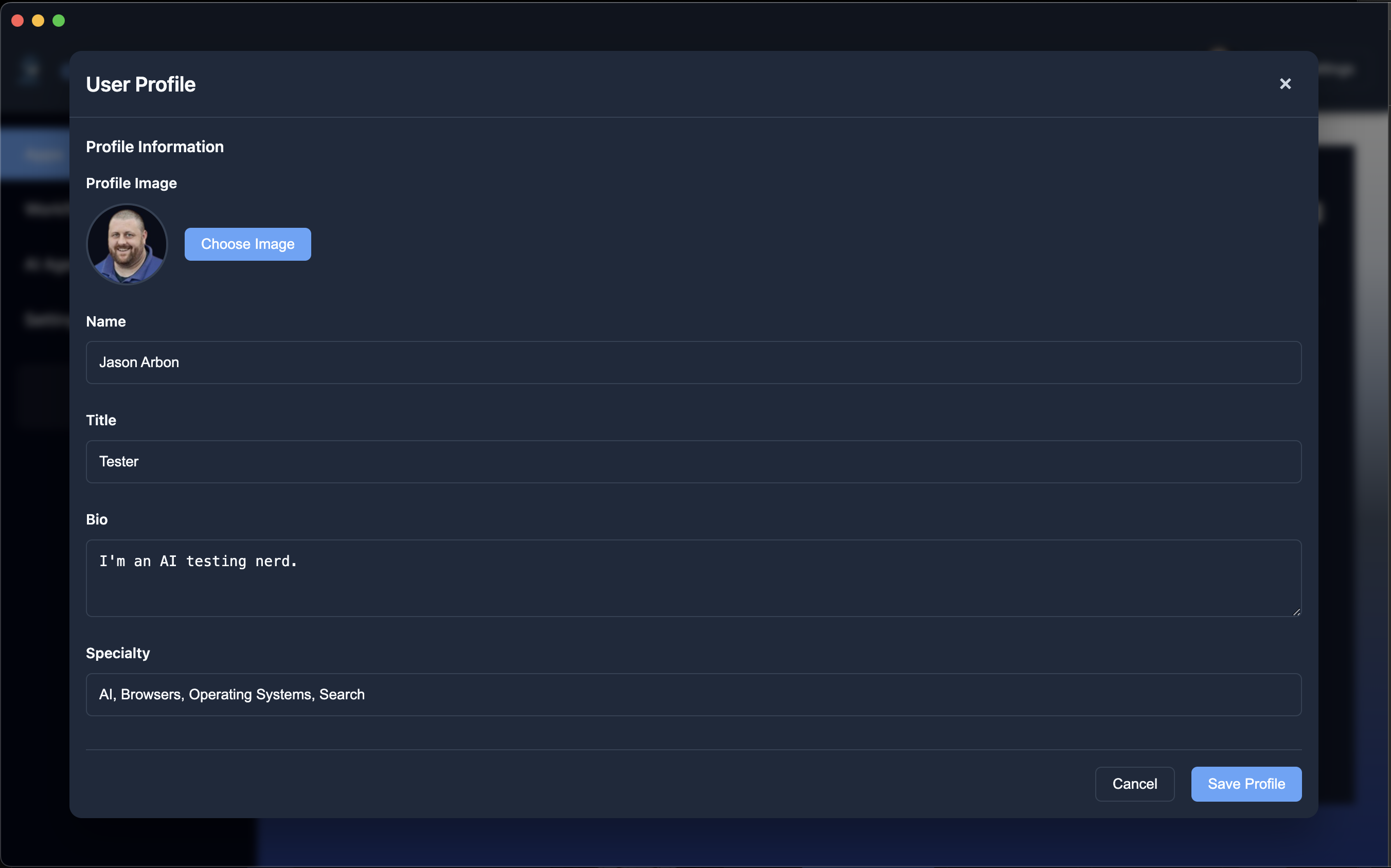

Step #3: Edit user profile

Edit your profile. All ratings and comments will be recorded as coming from this human expert tester profile.

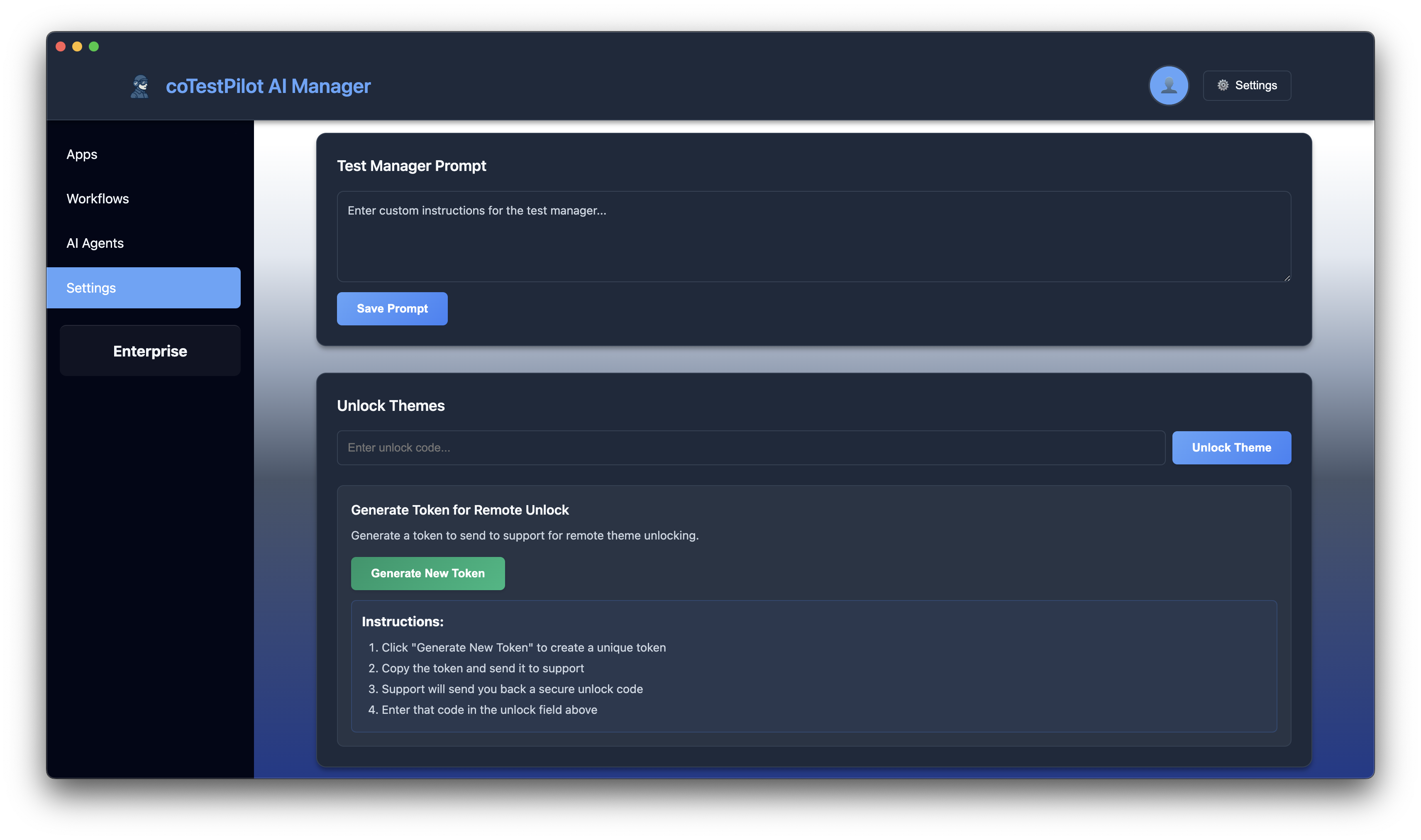

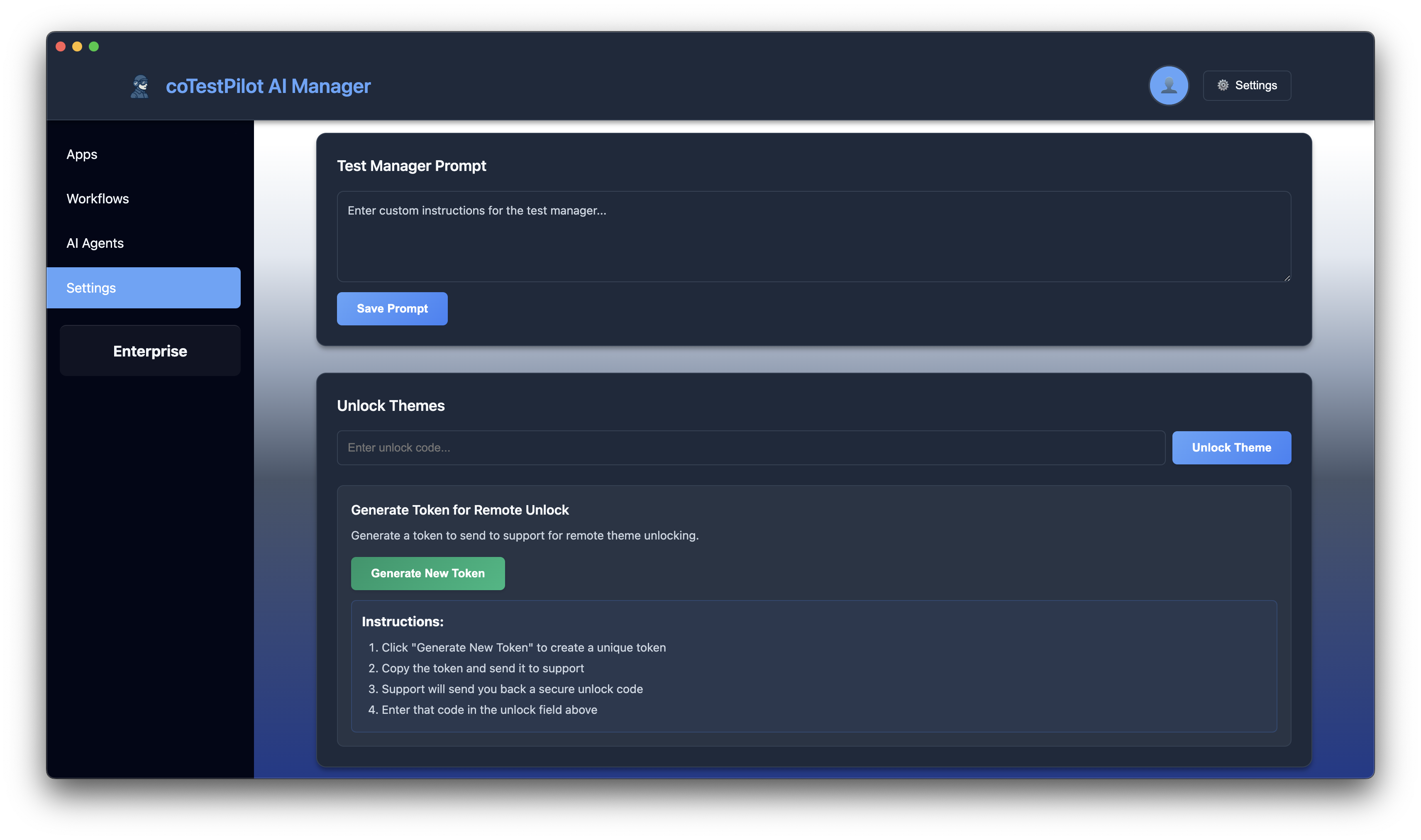

Step #4: Set test manager prompt (optional)

Optionally set a test manager prompt. It applies to all AI testers and can contain anything you like, such as login instructions or areas not to test.

Step #5: Click start checks button

Click the start checks button on the app card to begin the testing process.

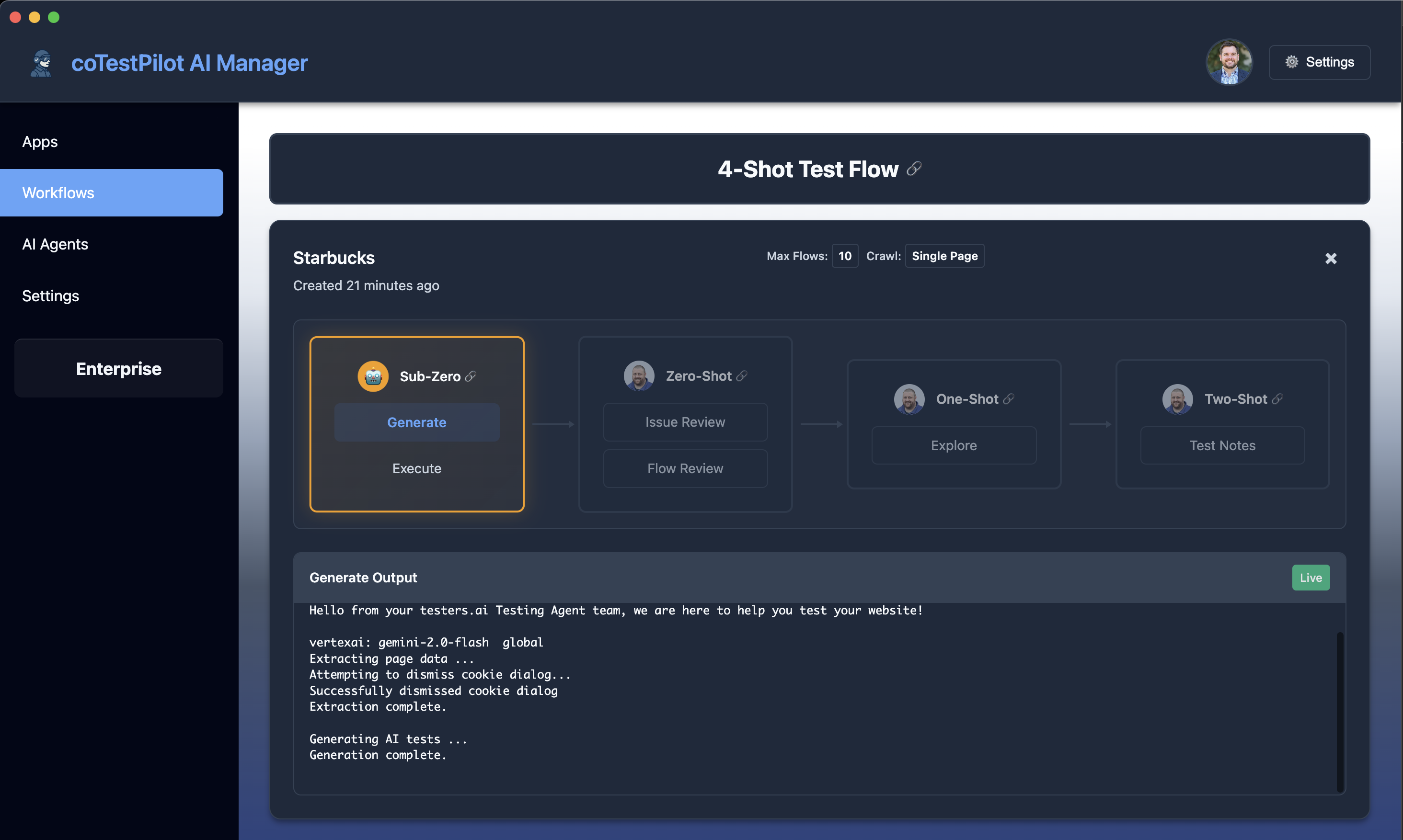

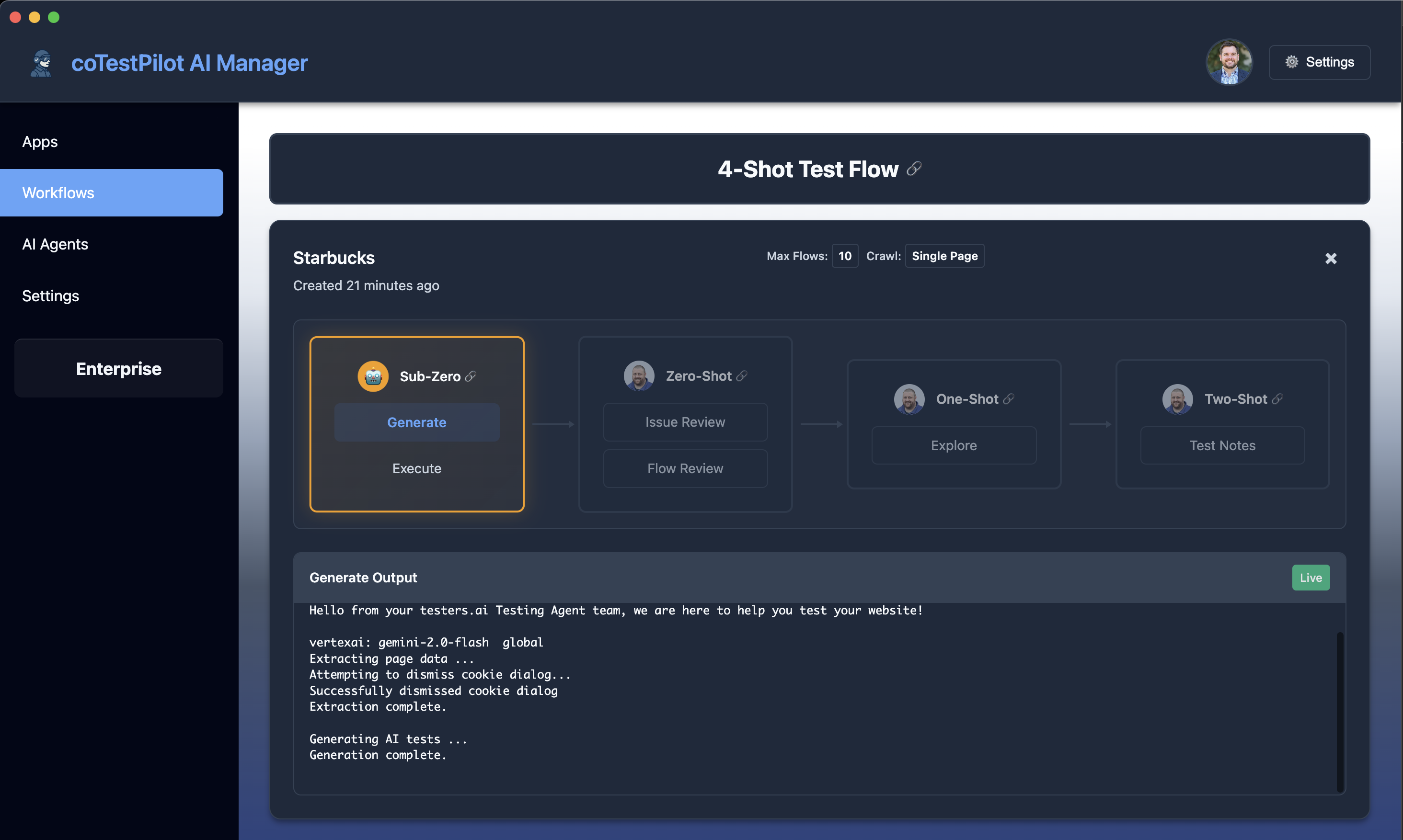

Step #6: Monitor running workflows

Track the status and progress of running workflows, including both AI and human steps.

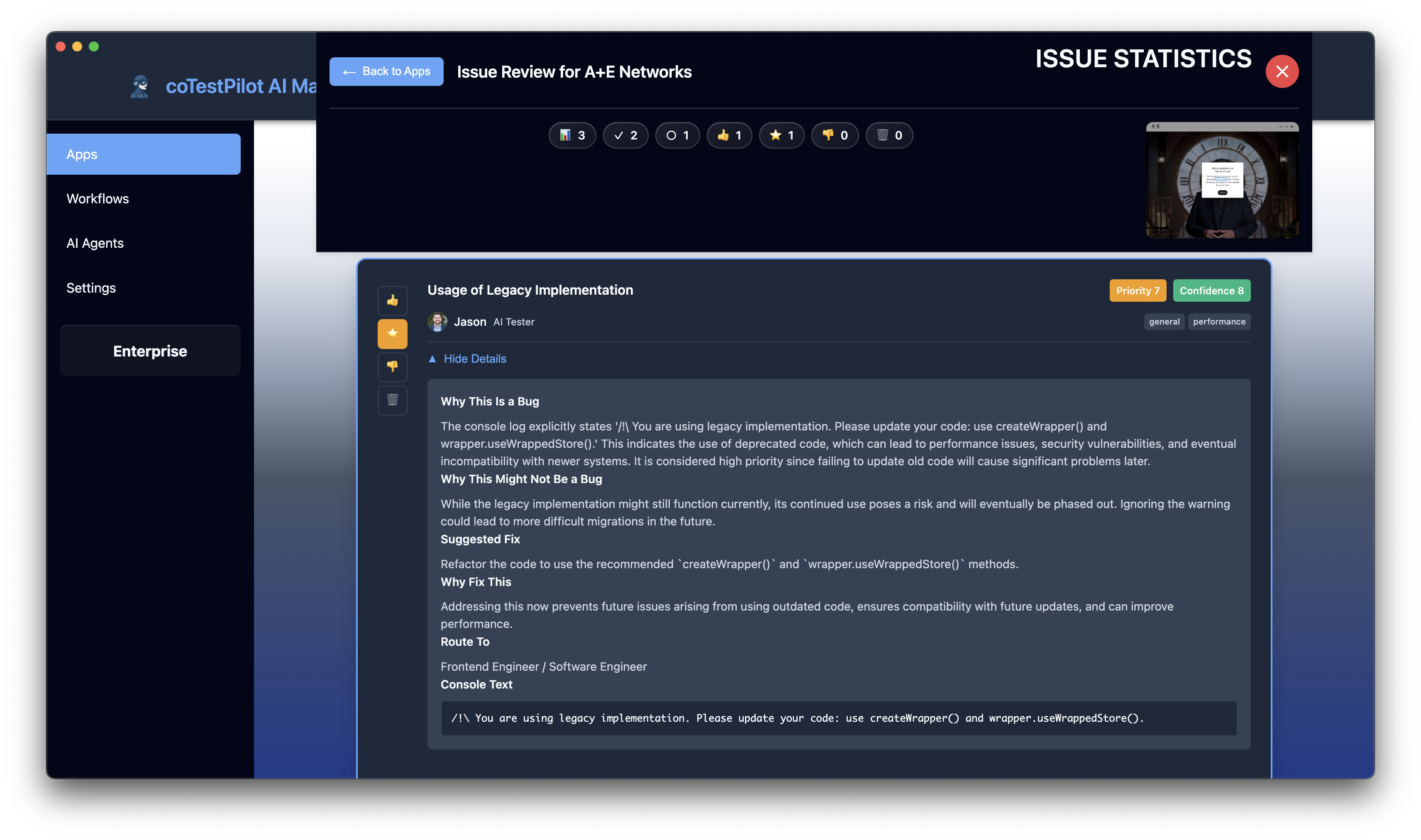

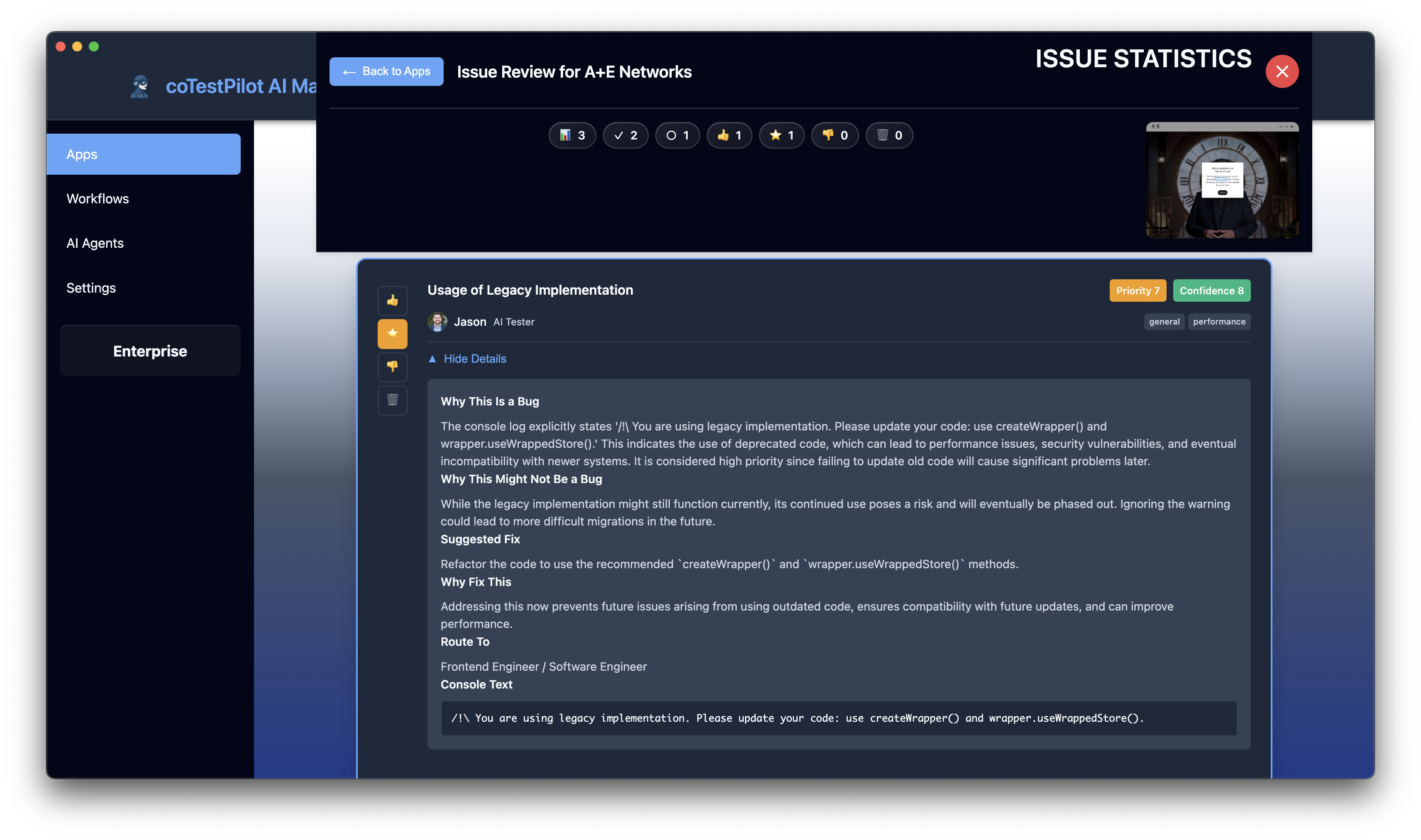

Step #7: Review Issues found by AI (Zero-Shot Workflow)

After the Sub-Zero AI automation step is completed, review the issues discovered by AI testing agents during the dynamic checks. This step is part of the 'Zero-Shot' workflow phase.

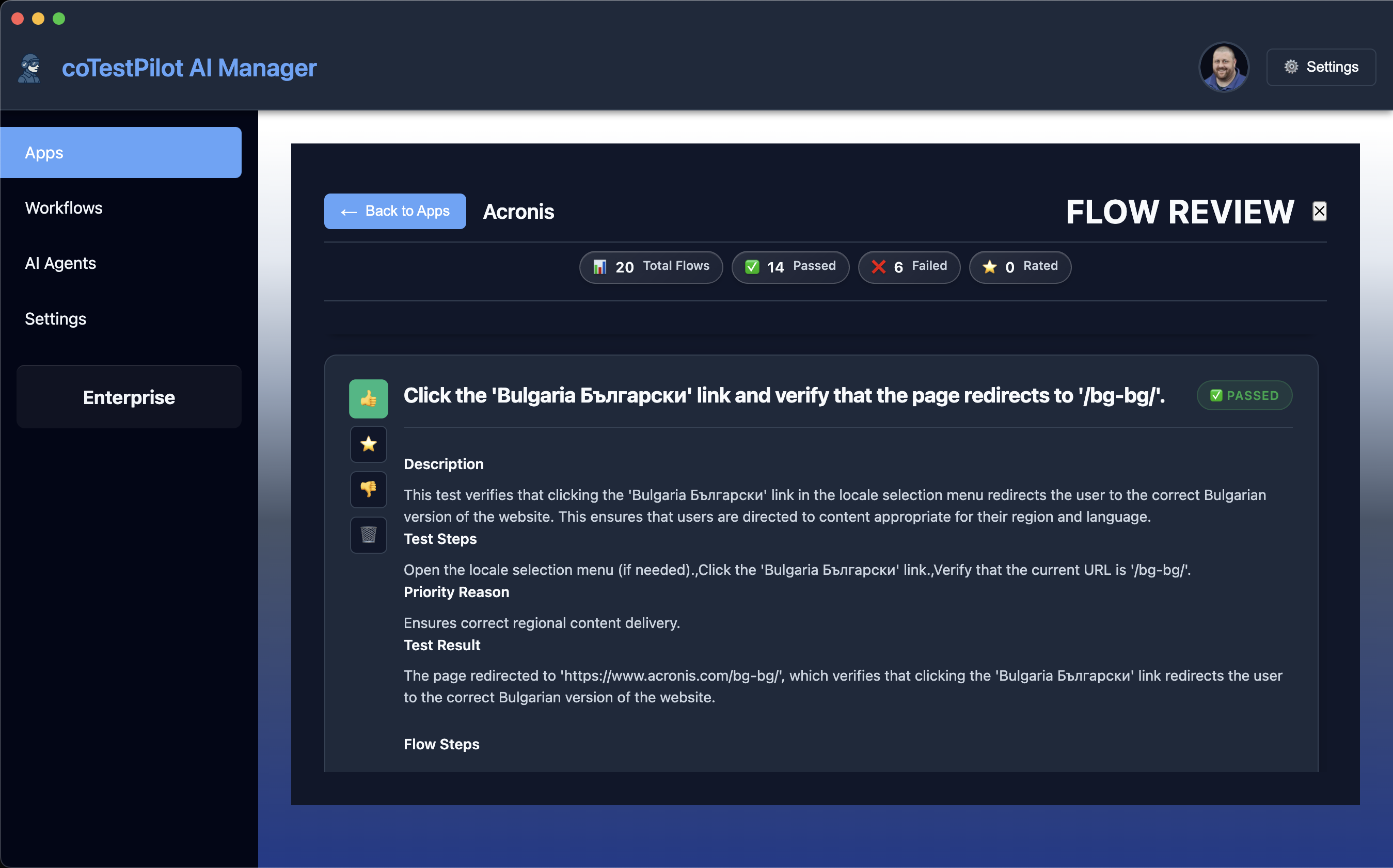

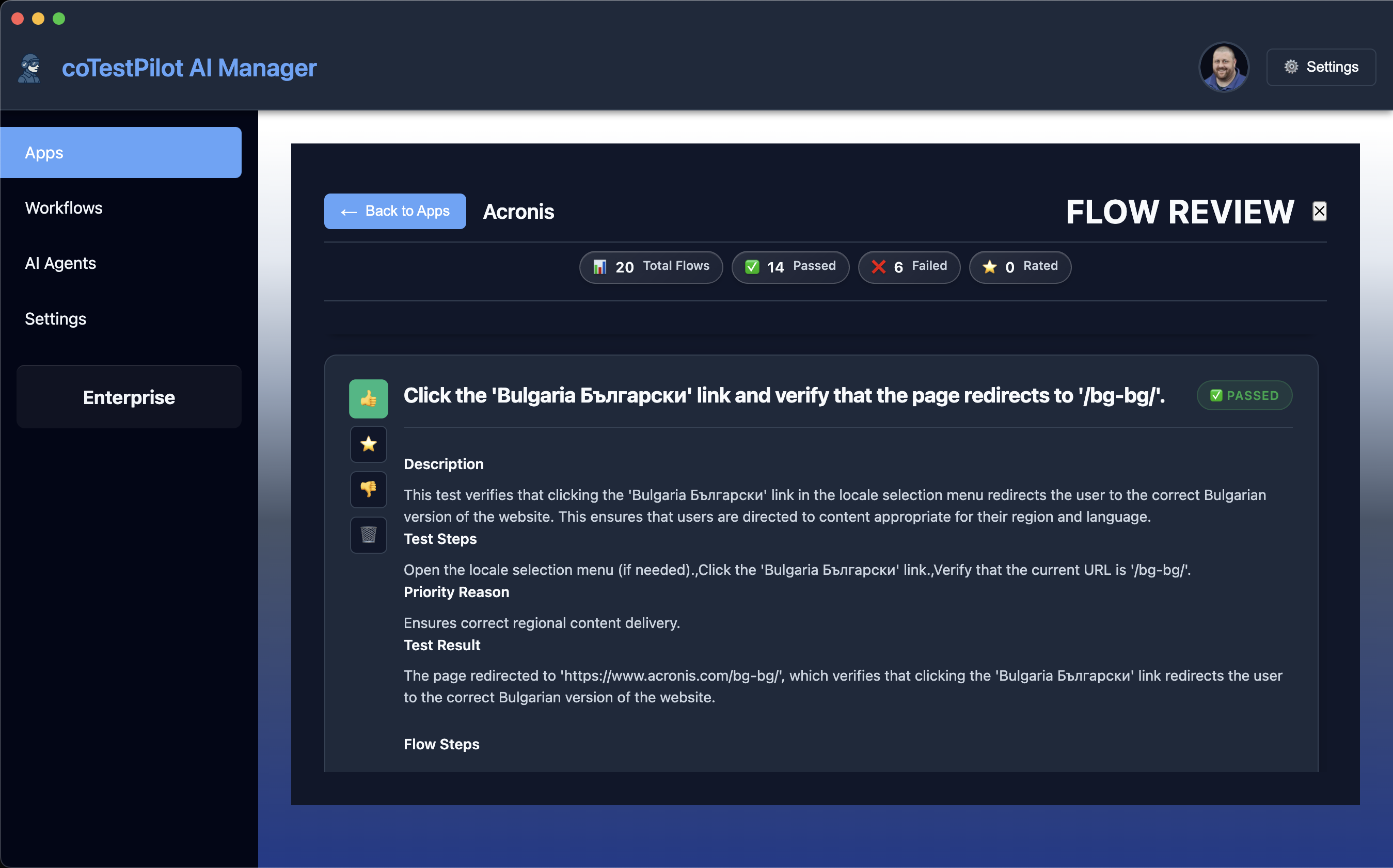

Step #8: Review test flows (Zero-Shot Workflow)

Review the resulting test flows from the testing agent's dynamic checks. This step is also part of the 'Zero-Shot' workflow phase.

Step #9: Exploratory Testing Notes (One-Shot Workflow)

If you performed any additional testing, whether prompted by the AI's issues and checks or by your own intuition, describe it so it can be included in the report as part of the 'One-Shot' step in the workflow.

Step #10: Test Case Notes

Describe any new AI or other test cases or configurations created to add coverage for the next test run.

Step #11: Generate test report

Generate a comprehensive test report with all findings and recommendations.

Step #12: Share Generated Report

The generated report opens in a web page. Use your browser's Save As option to save the entire report as a single HTML file for easy sharing.

Quick Start Commands

Get started with testers.ai by running these two simple commands:

Step 1: Generate AI Tests - This command generates hundreds of dynamic checks autonomously:

Step 2: Run Tests - This command runs the static and dynamic tests:

- --browser chrome - Use the installed version of Chrome, or whichever browser you prefer, instead of Chromium

- --debug - Enables verbose logging

- --team testers --activation-code XXXX - If you have a paid version, this unlocks all features

- --max-tests 20 - Maximum number of interactive checks to run

- --custom-prompt "username is jason and password is 123456" - Use this flag to tell the AI anything you like: what not to do, what to focus on, etc.
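The flags above can be assembled programmatically, for example when launching runs from a script. The following is a minimal sketch, not the official invocation: `build_run_args` is a hypothetical helper, `app_path` stands in for wherever your testers app lives, and any other arguments the real commands require are not shown here. Only the flag names come from the list above.

```python
from typing import List, Optional


def build_run_args(
    app_path: str,  # assumption: path to your testers app executable
    browser: Optional[str] = None,
    debug: bool = False,
    team: Optional[str] = None,
    activation_code: Optional[str] = None,
    max_tests: Optional[int] = None,
    custom_prompt: Optional[str] = None,
) -> List[str]:
    """Build an argument list using the flags documented above (hypothetical helper)."""
    args = [app_path]
    if browser:
        args += ["--browser", browser]
    if debug:
        args.append("--debug")
    # The source shows --team and --activation-code together, so emit them as a pair.
    if team and activation_code:
        args += ["--team", team, "--activation-code", activation_code]
    if max_tests is not None:
        args += ["--max-tests", str(max_tests)]
    if custom_prompt:
        # Passed as a single list element, so no shell quoting is needed.
        args += ["--custom-prompt", custom_prompt]
    return args
```

The resulting list can be handed to `subprocess.run(args, check=True)`. Passing a list rather than a shell string keeps a multi-word custom prompt intact as one argument.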

- Quality Summary - Overall assessment and grade of the application

- Issues Found - Detailed list of bugs and quality issues discovered

- Interactive Checks Performed - Results from all functional test cases executed

- Persona Feedback - User experience insights from AI personas

Processing Bug Output

testers.ai uses a modern, AI-first bug reporting schema that provides comprehensive context and reasoning for each discovered issue. This rich format can be easily converted to standard bug report formats for seamless integration into existing reporting systems and CI/CD pipelines.

testers.ai Bug Schema Fields

Each bug object in the testers.ai output contains the following comprehensive information:

| Field | Type | Description |
|---|---|---|
| bug_title | string | Short, descriptive title of the bug |
| bug_type | array | Categories (e.g. "usability", "WCAG", "security") |
| bug_confidence | integer | 1–10 score reflecting confidence it's a real bug |
| bug_priority | integer | 1–10 score indicating impact/severity |
| bug_reasoning_why_a_bug | string | Explanation of why this is considered a bug |
| bug_reasoning_why_not_a_bug | string | Counterargument, acknowledging uncertainty |
| suggested_fix | string | Recommended fix or mitigation strategy |
| bug_why_fix | string | Justification for why this should be fixed |
| what_type_of_engineer_to_route_issue_to | string | Suggested role (e.g. "Frontend Engineer") |
| possibly_relevant_page_console_text | string/null | Captured browser console text (if relevant) |
| possibly_relevant_network_call | string/null | Relevant network request URL |
| possibly_relevant_page_text | string/null | Snippet of page text related to the bug |
| possibly_relevant_page_elements | string/null | DOM element info (e.g. tag, href, id) |
| tester | string | Name of the human/AI tester who found it |
| byline | string | Title or role of the tester |
| image_url | string | (Optional) Image avatar of the tester |
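As an illustration of how these fields might be consumed, the sketch below filters bugs by `bug_confidence` and `bug_priority` and groups the survivors by `what_type_of_engineer_to_route_issue_to`. It assumes the bugs are already loaded as a list of dictionaries keyed by the field names in the table; the `triage` function, the thresholds, and the "Unassigned" fallback are illustrative choices, not part of testers.ai.

```python
from collections import defaultdict
from typing import Any, Dict, List

Bug = Dict[str, Any]  # one bug object, keyed by the schema field names


def triage(bugs: List[Bug], min_confidence: int = 7, min_priority: int = 5) -> Dict[str, List[Bug]]:
    """Keep likely, impactful bugs and group them by suggested engineer role."""
    kept = [
        b for b in bugs
        if b.get("bug_confidence", 0) >= min_confidence
        and b.get("bug_priority", 0) >= min_priority
    ]
    # Highest priority first; confidence breaks ties.
    kept.sort(
        key=lambda b: (b.get("bug_priority", 0), b.get("bug_confidence", 0)),
        reverse=True,
    )
    routed: Dict[str, List[Bug]] = defaultdict(list)
    for b in kept:
        role = b.get("what_type_of_engineer_to_route_issue_to") or "Unassigned"
        routed[role].append(b)
    return dict(routed)


# Made-up sample data for illustration only.
sample = [
    {"bug_title": "Login button unlabeled", "bug_type": ["WCAG"],
     "bug_confidence": 9, "bug_priority": 6,
     "what_type_of_engineer_to_route_issue_to": "Frontend Engineer"},
    {"bug_title": "Odd footer spacing", "bug_type": ["usability"],
     "bug_confidence": 4, "bug_priority": 2,
     "what_type_of_engineer_to_route_issue_to": "Frontend Engineer"},
    {"bug_title": "Token in URL", "bug_type": ["security"],
     "bug_confidence": 8, "bug_priority": 9,
     "what_type_of_engineer_to_route_issue_to": "Backend Engineer"},
]

for role, items in triage(sample).items():
    print(role, [b["bug_title"] for b in items])
```

Because the schema also carries `bug_reasoning_why_not_a_bug`, a reviewer working through the lower-confidence items that this filter drops has the counterargument at hand.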

Converting Bug Reports with convert.py

The convert.py utility can transform testers.ai bug reports into multiple standard formats for integration with your existing tools and workflows. Download the script to get started.

Supported Output Formats:

junit, xunit, nunit, xunitnet, trx, testng, allure-xml, mocha-junit, jest-junit

pytest-json, allure, mocha, jest

unittest, pytest, tap

csv, tsv, json

Usage Examples:

Convert to all formats:

Convert to specific format:

Convert and compress results:

Convert to CSV for spreadsheet analysis:

- Opinionated & Verbose - Built to justify each bug and anticipate objections

- Human-Readable - Structured enough for automated conversion yet easy to understand

- Full Traceability - Links back to specific page content, console logs, and network calls

- AI Reasoning - Includes both why something is a bug AND why it might not be

- Actionable Insights - Suggests fixes and appropriate engineer types for routing

- CI/CD Pipelines - Convert to JUnit XML for Jenkins, GitHub Actions, or Azure DevOps

- Test Management - Import CSV/JSON into Jira, TestRail, or custom dashboards

- Quality Metrics - Analyze trends using CSV exports in Excel or BI tools

- Developer Workflow - Use pytest/unittest formats for local development testing
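To make the JUnit XML idea concrete, here is a rough sketch of how a list of bug objects could be mapped to a JUnit-style test suite using only the Python standard library. This is not how convert.py works internally, which this page does not describe. The `bugs_to_junit` function and the mapping of fields to XML attributes are assumptions chosen for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict, List


def bugs_to_junit(bugs: List[Dict[str, Any]], suite_name: str = "testers.ai") -> str:
    """Render each bug as a failed <testcase> in a JUnit-style <testsuite> (illustrative mapping)."""
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(bugs)),
        failures=str(len(bugs)),  # every reported bug is treated as a failure
    )
    for bug in bugs:
        case = ET.SubElement(
            suite,
            "testcase",
            classname=",".join(bug.get("bug_type", [])) or "uncategorized",
            name=bug.get("bug_title", "untitled"),
        )
        failure = ET.SubElement(
            case,
            "failure",
            message=bug.get("bug_reasoning_why_a_bug", ""),
            type="priority-{}".format(bug.get("bug_priority", "unknown")),
        )
        # The suggested fix becomes the failure body so it shows up in CI logs.
        failure.text = bug.get("suggested_fix", "")
    return ET.tostring(suite, encoding="unicode")
```

Writing the returned string to a `.xml` file gives CI systems that read JUnit reports something to display. For real pipelines, prefer the output of convert.py itself.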

IDE Extensions

Integrate AI testing directly into your development workflow. Run tests, view results, and fix issues without leaving your IDE.

Visual Studio Code

Most popular editor

Cursor

AI-powered editor

Windsurf

Modern development

- One-Click Testing - Run AI tests directly from your editor with a single command

- Real-Time Results - View test results and bug reports inline with your code

- Quick Fix Suggestions - Get AI-powered suggestions for fixing discovered issues

- Integration with Existing Workflows - Works alongside your current testing tools and CI/CD pipelines

- Custom Test Configuration - Configure test parameters and AI prompts directly in your IDE

Our IDE extensions seamlessly integrate with your existing development workflow:

- Pre-commit Testing - Run AI tests before committing code changes

- Debug Integration - Link test failures directly to problematic code sections

- Team Collaboration - Share test results and bug reports with team members

- Version Control - Track test results across different code versions and branches

Fully Managed

Let our experts handle everything. We set up, run, and manage your AI Testing infrastructure while you focus on building great products.

Our team of testing experts will configure and optimize your AI testing environment for maximum effectiveness.

Our AI Testing Agents execute the proven 4-shot testing methodology, which includes:

- Shot 1: Initial test generation and execution

- Shot 2: Analysis and refinement based on results

- Shot 3: Targeted testing of identified issues

- Shot 4: Final validation and comprehensive reporting

Continuous monitoring and alerting to ensure your testing infrastructure runs smoothly around the clock.
